Thank you to all who contributed to this thread. The responses were quite impressive, and showed some creative, innovative use of statistics.

With all things equal between the 2 batters, including the cost of outs, the real question here is whether player A’s extra hits and higher batting average are more valuable than player B’s extra walks and higher isolated power. A few people here have used wOBA to compare the hitters, and that is a great method. But it’s a little abstract for some folks, who want to know exactly how the numbers are calculated, so I’m going to go old school and use linear weights (lwts).

I know this is really elementary for a lot of you, and I apologize if it’s repetitive. But I think explaining how linear weights work will help some people here understand why these 2 players have contributed the same amount of offense. They are equally productive.

My favorite mathematician is

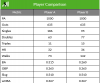

Andrey Markov, who was not only brilliant, but also sexy as hell. Markov is best known for Markov chains, which were based on the study of the probability of mutually dependent events. To simplify, an event which is influenced by past events and will influence future events would be considered Markovian. For example, the stock market would be considered Markovian, as would a blackjack game, while a coin flip would not.

Baseball certainly is Markovian, in that what happens in a current at bat is influenced by past events, just as future events are influenced by present events. What I mean is that a single with two outs and no runners on base has a different value than a single with the bases loaded and no outs, with the events leading up to those respective singles being the difference, and the subsequent events also influencing the total run result. Clearly, all singles are not equal.

So how do we determine with any degree of accuracy the “value” of a single (or the result of any at bat)? The following chart will help answer that question. The chart gives the “net expected run value” for each of the 24 different situations a batter can see in an at bat, from none on, none out, to bases loaded, two outs. The run values are calculated by looking at each at bat over the course of multiple seasons and counting how many runs score from that point to the end of the inning. These totals are then averaged to give the “net expected run value” for each situation.

The following chart uses data from 2015-2018, and while the run expectancy numbers do change depending on run environment, the value of these 2 players will remain equal in all run environments (I couldn't resist including a picture of Markov and those sexy math eyes!):

View attachment 60660

This may be easier to understand when looking at the first batter of an inning--with no outs and none on, an average team will score 0.492 runs. Multiplying this by 9 innings in a game yields just over 4.4 runs, which is the average number of runs scored per game by Major League Baseball teams from 2015-18.

It’s worth taking a minute to look at the chart, as it will show the value of an out. For example, if you have a runner on 1st and no outs, the average runs scored is .859. If the team successfully bunts him to 2nd base, you now have a runner on 2nd and 1 out, which will on average yield .678 runs. That bunt ‘cost’ the team almost 2/10 of a run. And while there certainly are situations where the bunt is a good strategy, in most it is not. Outs are incredibly valuable in baseball. But I digress.
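For anyone who wants to check that arithmetic, here is a minimal Python sketch. It only contains the three chart values quoted in this post; the dictionary layout and the function names are my own illustration, not anything from the chart itself.

```python
# Run expectancy values quoted in the post (2015-2018 data).
# Keys are (bases occupied, outs). Only the three states mentioned
# in the text are included; the full chart has 24 states.
RUN_EXPECTANCY = {
    ("empty", 0): 0.492,
    ("1st", 0): 0.859,
    ("2nd", 1): 0.678,
}


def runs_per_game(re_start_of_inning, innings=9):
    """Scale the none-on, none-out run expectancy to a full game."""
    return re_start_of_inning * innings


def state_change(before, after):
    """Change in run expectancy when moving from one base-out state to another."""
    return RUN_EXPECTANCY[after] - RUN_EXPECTANCY[before]


per_game = runs_per_game(RUN_EXPECTANCY[("empty", 0)])
bunt_cost = state_change(("1st", 0), ("2nd", 1))

print(f"Expected runs per 9 innings: {per_game:.3f}")
print(f"Change from a successful sacrifice bunt: {bunt_cost:+.3f}")
```

The bunt comes out at about -0.18 runs, which is the "almost 2/10 of a run" mentioned above.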

Back to linear weights. To calculate linear weights, you total the change in run expectancy produced by every occurrence of an offensive event and divide by the number of times that event occurred. For example, if you took the total change in expected runs generated by all singles hit in a season (or multiple seasons) and divided that by the total number of singles, you would get the average value of a single. Doing the same thing for doubles, triples, home runs, outs, etc., gives you the average value of each of those offensive events. If you were to total the run values of all the hits, walks, hit-by-pitches, stolen bases, caught stealing, and outs over the course of a season, you would arrive at a sum close to zero (some plays, such as passed balls or balks, are not a product of offense and are thus not accounted for in linear weights). Simply multiplying a batter's singles, doubles, outs, etc., by the calculated average value of those events will, therefore, give you the total number of runs a player has contributed above or below an average player.
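The averaging step can be sketched in a few lines of Python. Treat this as an illustration under assumptions, not as the actual calculation behind the values in this post: the play log is a toy, only its first row uses numbers from the chart (0.492 to 0.859), the other rows are made-up placeholders, and I am assuming the usual convention that any runs scoring on the play are added to the change in run expectancy.

```python
from collections import defaultdict

# Each play: (event, run expectancy before, run expectancy after, runs scored on the play).
# Row 1 uses chart values quoted in the post; every other row is a
# HYPOTHETICAL placeholder so the averaging has something to work on.
plays = [
    ("single", 0.492, 0.859, 0),  # leadoff single, values from the post
    ("single", 1.10, 1.45, 1),    # hypothetical
    ("out", 0.492, 0.26, 0),      # hypothetical
    ("out", 0.859, 0.51, 0),      # hypothetical
]


def linear_weights(play_log):
    """Average run value of each event type across the play log."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for event, re_before, re_after, runs_scored in play_log:
        # Run value of one play: change in run expectancy plus runs that scored.
        totals[event] += (re_after - re_before) + runs_scored
        counts[event] += 1
    return {event: totals[event] / counts[event] for event in totals}


weights = linear_weights(plays)
for event, value in weights.items():
    print(f"{event}: {value:+.3f}")
```

With a real play-by-play log covering several seasons in place of the toy list, the same loop would produce one average value per event type.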

The event values are as follows:

• singles +0.47

• doubles +0.76

• triples +1.03

• home runs +1.40

• walks/HBP +0.33

• intentional walks +0.185

• stolen bases +0.193

• caught stealing -0.437

• outs -0.271

Because we know the cost of player A's and player B's outs is identical (each makes 381 outs in the sample below), we can eliminate outs from this comparison. A sample season for both players with 600 plate appearances would be:

| Player | PA | AB | H | 1B | 2B | 3B | HR | BB/HBP | BA | OBP | SLG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 600 | 556 | 175 | 115 | 34 | 1 | 25 | 44 | 0.315 | 0.365 | 0.515 |
| B | 600 | 515 | 134 | 74 | 24 | 1 | 35 | 85 | 0.260 | 0.365 | 0.515 |
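If you want to verify the slash lines and the out totals from the counting stats, a short Python sketch like the following will do it. The dictionary keys and the function name are my own, and the OBP formula assumes every plate appearance is either an at bat or a walk/HBP (no sacrifices), which is how this sample season is built.

```python
# Counting stats copied from the sample season above.
players = {
    "A": {"AB": 556, "1B": 115, "2B": 34, "3B": 1, "HR": 25, "BB_HBP": 44},
    "B": {"AB": 515, "1B": 74, "2B": 24, "3B": 1, "HR": 35, "BB_HBP": 85},
}


def slash_line(p):
    """Return BA, OBP, SLG and outs from a player's counting stats."""
    hits = p["1B"] + p["2B"] + p["3B"] + p["HR"]
    total_bases = p["1B"] + 2 * p["2B"] + 3 * p["3B"] + 4 * p["HR"]
    # Assumption: PA = AB + BB/HBP (no sacrifice flies or bunts in the sample).
    plate_appearances = p["AB"] + p["BB_HBP"]
    return {
        "BA": hits / p["AB"],
        "OBP": (hits + p["BB_HBP"]) / plate_appearances,
        "SLG": total_bases / p["AB"],
        "outs": p["AB"] - hits,
    }


lines = {name: slash_line(stats) for name, stats in players.items()}
for name, line in lines.items():
    print(name, f"{line['BA']:.3f}/{line['OBP']:.3f}/{line['SLG']:.3f}", "outs:", line["outs"])
```

Both players come out with the same OBP, slugging percentages within a point of each other, and 381 outs apiece, which is why outs drop out of the comparison.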

Multiplying each player's hit and walk/HBP totals by the event values above gives us player A at 130.4 and player B at 131.1, less than 1 run apart. They are equally productive. You can try different numbers that create the same slash lines, and you will get essentially the same result. Remember, these players batted in the same situations with the same teammates in the same parks, etc., etc.
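Here is that multiplication as a small Python sketch, using the event values and the sample season from this post. The names `WEIGHTS` and `runs_from_events` are just my own labels. Outs are left out on purpose, since both players make the same number of them.

```python
# Event values from the list above (outs omitted: both players make 381).
WEIGHTS = {"1B": 0.47, "2B": 0.76, "3B": 1.03, "HR": 1.40, "BB_HBP": 0.33}

# Event counts from the sample season table.
players = {
    "A": {"1B": 115, "2B": 34, "3B": 1, "HR": 25, "BB_HBP": 44},
    "B": {"1B": 74, "2B": 24, "3B": 1, "HR": 35, "BB_HBP": 85},
}


def runs_from_events(counts):
    """Sum of (event count x event value) over a player's hits and walks/HBP."""
    return sum(WEIGHTS[event] * n for event, n in counts.items())


runs_a = runs_from_events(players["A"])
runs_b = runs_from_events(players["B"])

print(f"Player A: {runs_a:.1f}")            # 130.4
print(f"Player B: {runs_b:.1f}")            # 131.1
print(f"Gap: {abs(runs_a - runs_b):.2f}")   # 0.66
```

Swapping in other counting stats that produce the same slash lines is an easy way to test the claim for yourself.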

I hope this all makes sense. My wife often says I'm effectively illiterate in both Spanish and English. If you have any questions, please don't hesitate to ask.

Thanks for the nice distraction. Maybe next time we can do wins vs runs, or the role of luck in sports.